1 Non-classical Logic

There are no non-experienced truths – L.E.J. Brouwer

1.1 Intuitionistic Logic

Idea: a proof of is a “procedure”

that comments a proof of

into a proof of .

In particular, is not

always the same as .

Fact: The law of excluded middle ()

is not generally intuitionistically valid.

Moreover, the Axiom of Choice is incompatible with intuitionistic set theory.

We take choice to mean that any family of inhabited sets admits a choice function.

Theorem 1.1.1 (Diaconescu).

The law of excluded middle can be intuitionistically deduced from the Axiom

of Choice.

Proof.

Let

be a proposition. By the Axiom of Separation, the following are sets (i.e. we can construct a proof that they

are sets):

|

|

As

and ,

we have that

is a family of inhabited sets, thus admits a choice function

by the Axiom of Choice. This satisfies

and

by definition.

Thus we have

and .

Now

means that

and similarly for .

We can have the following:

-

(1)

We have a proof of ,

so

has a proof, so we must have a proof of .

-

(2)

We have a proof of ,

which similarly gives a proof of .

-

(3)

We have

and ,

in which case we can prove :

given a proof of ,

we can prove that

(by Extensionality), in which case ,

a contradiction.

So we can always specify a proof of

or a proof of

or a proof of .

□

Why bother?

-

Intuitionistic maths is more general: we assume less.

-

Several notions that are conflated in classical maths are genuinely different constructively.

-

Intuitionistic proofs have a computable content that may be absent in classical proofs.

-

Intuitionistic logic is the internal logic of an arbitrary topos.

Let’s try to formalise the BHK interpretation of logic.

We will inductively define a provability relation by enforcing rules that implement the BHK

interpretation.

We will use the notation to

mean that is a consequence

of the formulae in the set .

Rules for Intuitionistic Propositional Calculus (IPC)

-

(-I)

-

(-I)

,

-

(-E)

and

-

(-E)

-

(-I)

-

(-E)

-

(-E)

for any

-

(Ax)

for any

-

(Weak)

-

(Contr)

We obtain classical propositional logic (CPC) by adding either:

By

we mean ‘if we can prove

assuming and we

can prove assuming

, then we can infer

by “discharging / closing”

the open assumptions

and ’.

In particular, the (-I)-rule

can be written as

We obtain intiuitionistic first-order logic (IQC) by adding rules for quantification:

-

(-I)

,

where

is a term.

-

(-E)

,

if

is not free in .

-

(-I)

if

is not free in .

-

(-E)

,

where

is a term.

Example 1.1.2.

Let’s give a natural deduction proof of

.

|

|

Example 1.1.3.

Let’s prove the Hilbert-style axioms

and

.

| |

(toE) |

|

|

| |

(toE) |

|

|

| |

(toI,ψ) |

|

|

| | (toI, φ→ψ) |

|

|

| |

(toI, (φ→(ψ→χ))) |

|

|

| |

If is a set of propositions

in the language and is a

proposition, we write ,

,

,

, if there is a

proof of

from in

the respective logic.

Lemma 1.1.4.

Assuming that:

Then . Moreover,

if

is a primitive

proposition and

is any proposition, then

Proof.

Induction over the size of proofs. □

1.2 The simply typed -calculus

For now we assume given a set

of simple types generated by a grammar

where is a countable set of type

variables, as well as an infinite set

of variables.

Definition 1.2.1 (Simply typed lambda-term).

The set

of simply

typed -terms

is defined by the grammar

|

|

A context is a set of pairs

where the

are (distinct) variables and each .

We write

for the set of all possible contexts. Given a context ,

we also write

for the context

(if

does not appear in ).

The domain of

is the set of variables that occur in it, and the range

is the set of types that it manifests.

Definition 1.2.2 (Typability relation).

We define the typability relation

via:

-

(1)

For every

context ,

and variable

not occurring in

,

and

type ,

we have

.

-

(2)

Let

be a

context,

a variable not occurring in

,

and let

be

types, and

be a

-term.

If

,

then

.

-

(3)

Let

be a context,

be types, and

be

terms. If

and

,

then

.

A variable occurring

in a -abstraction

is bound, and it is free

otherwise. We say that terms

and are

-equivalent

if they differ only in the names of the bound variables.

If and

are

-terms and

is a variable, then we

define the substitution of

for in

by:

-

;

-

if ;

-

for -terms

;

-

,

where

and

is not free in .

Definition 1.2.3 (beta-reduction).

The

-reduction relation is

the smallest relation

on

closed under the following rules:

-

-

if

,

then for all variables

and types

,

we have

,

-

and

as a

-term,

then

and

.

We also define -equivalence

as the smallest equivalence

relation containing .

Example 1.2.4 (Informal).

We have .

When we reduce , the term

being reduced is called a -redex,

and the result is its -contraction.

Lemma 1.2.5 (Free variables lemma).

Assuming that:

Then

-

(1)

If ,

then .

-

(2)

The free variables of

occur in .

-

(3)

There is a context

comprising exactly the free variables in ,

with .

Lemma 1.2.6 (Generation Lemma).

-

(1)

For every variable

,

context ,

and

type ,

if

,

then

;

-

(2)

If

,

then there is a type

such that

and

;

-

(3)

If

,

then there are

types

and

such that

and

.

Lemma 1.2.7 (Substitution Lemma).

-

(1)

If

and

is a

type variable, then

;

-

(2)

If

and

,

then

.

Proposition 1.2.8 (Subject reduction).

Assuming that:

Proof.

By induction on the derivation of ,

using Lemma 1.2.6 and Lemma 1.2.7. □

Theorem 1.2.9 (Church-Rosser for lambda(->)).

Assuming that:

Then there is a

-term

such

that

,

, and

.

Pictorially:

Definition (-normal form).

A -term

is in -normal

form if there is no term

such that .

Proposition 1.2.11 (Uniqueness of types).

-

(1)

If

and

,

then

.

-

(2)

If

,

,

and

,

then

.

Example 1.2.12.

There is no way to assign a type to

. If

is of type

, then in

order to apply

to , it has to

be of type

for some .

But .

Definition 1.2.13 (Height).

The height function is the recursively defined map

that maps a type variable to ,

and a function type

to .

We extend the height function from types to -redexes

by taking the height of its -abstraction.

Not.: .

Theorem 1.2.14 (Weak normalisation for lambda(->)).

Assuming that:

Proof (“Taming the Hydra”).

The idea is to apply induction on the complexity of

. Define

a function

by

|

|

where

is the greatest height of a redex in ,

and

is the number of redexes in

of that height.

We will use induction over

to show that if

is typable, then it admits a reduction to -normal

form.

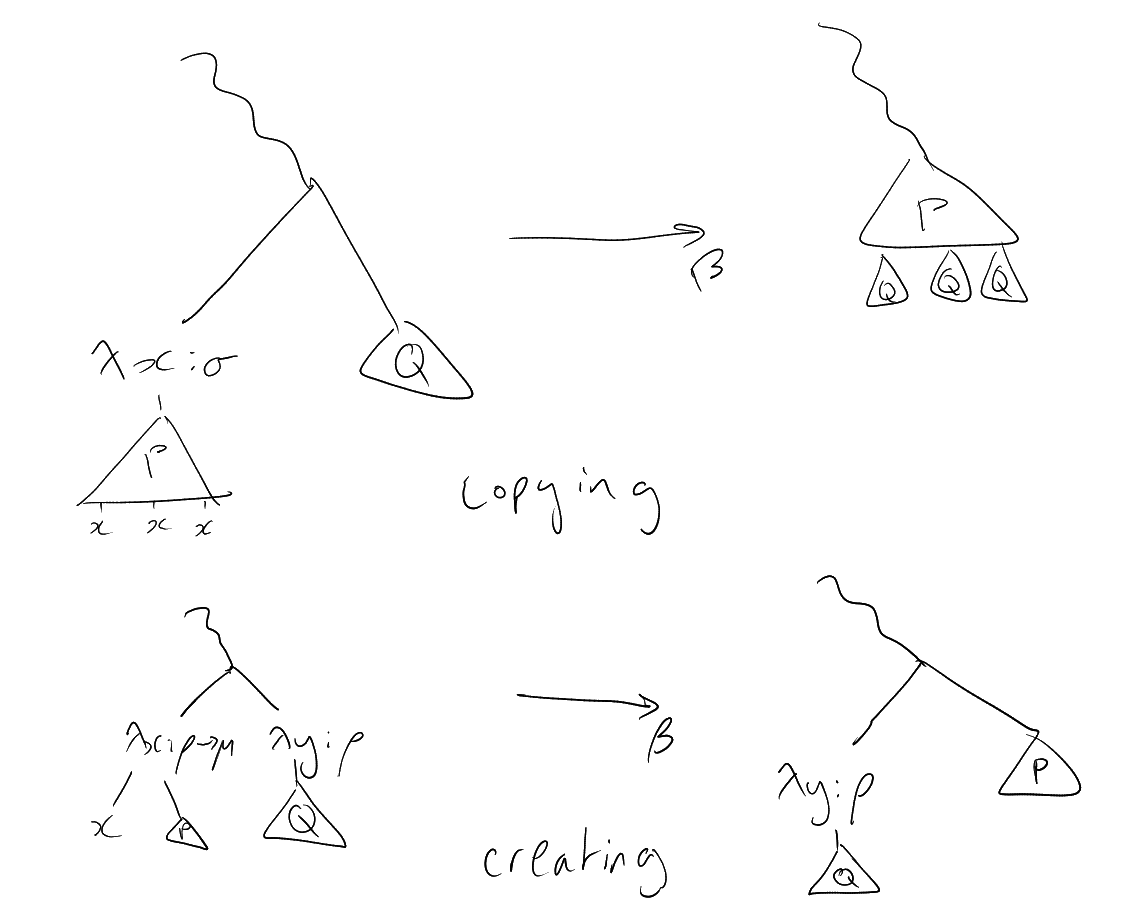

Problem: reductions can copy redexes or create new ones.

Strategy: always reduce the right most redex of maximum height.

We will argue that by following this strategy, any new redexes we generate have to be lower than the

height of the redex we picked to reduce.

If and

is already in

-normal form, then claim

is trivially true. If is

not in -normal form, let

be the rightmost redex

of maximal height .

By reducing ,

we may introduce copies of existing redexes, or create new ones. Creation of new redexes of

has to

happen in one of the following ways:

-

(1)

If

is of the form ,

then it reduces to ,

in which case there is a new redex of height .

-

(2)

We have

occurring in

in the scenario .

Say

reduces to .

Then we create a new redex of height .

-

(3)

The last possibility is that ,

and that it occurs in

as .

Reduction then gives the redex

of height .

Now itself is gone

(lowering the count by ),

and we just showed that any newly created redexes have height

.

If we have and

contains multiple free

occurrences of , then all the

redexes in are multiplied

when performing -reduction.

However, our choice of ensures that

the height of any such redex in

has height , as they

occur to the right of in

. It is this always the case that

(in the lexicographic order), so

by the induction hypothesis,

can be reduced to -normal

form (and thus so can ).

□

Theorem 1.2.15 (Strong Normalisation for lambda(->)).

Assuming that:

Then there is no infinite reduction sequence

.

Proof.

See Example Sheet 1. □

1.3 The Curry-Howard Correspondence

Propositions-as-types: idea is to think of

as the “type of its proofs”.

The properties of the STC

match the rules of IPC rather precisely.

First we will show a correspondence between

and the implication fragment IPC

of IPC that includes only the

connective, the axiom scheme, and the

and

rules. We will later extend this to the whole of IPC by introducing more complex types to

.

Start with IPC and build

a STC out of it whose

set of type variables

is precisely the set of primitive propositions of the logic.

Clearly, the set

of types then matches the set of propositions in the logic.

Comment:

if is not

free in .

Proposition 1.3.1 (Curry-Howard for IPC(->)).

Assuming that:

Then

-

(1)

If ,

then

-

(2)

If ,

then there is a simply typed -term

such that .

Proof.

-

(1)

We induct over the derivation of .

If

is a variable not occurring in

and the derivation is of the form ,

then we’re supposed to prove that .

But that follows from

as .

If the derivation has

of the form

and ,

then we must have .

By the induction hypothesis, we have that ,

i.e. .

But then

by (-I).

If the derivation has the form ,

then we must have

and .

By the induction hypothesis, we have that

and ,

so

by (-E).

-

(2)

Again, we induct over the derivation of .

Write .

Then we only have a few ways to construct a proof at a given stage. Say the derivation is of the form

.

If ,

then clearly ,

and if

then .

Suppose the derivation is at a stage of the form

Then by the induction hypothesis, there are -terms

and

such that

and ,

from which .

Finally, if the stage is given by

then we have two sub-cases:

-

If ,

then the induction hypothesis gives

for some term .

By weakening, we have ,

where

does not occur in .

But then

as needed.

-

If ,

then the induction hypothesis gives

for some ,

thus

as needed. □

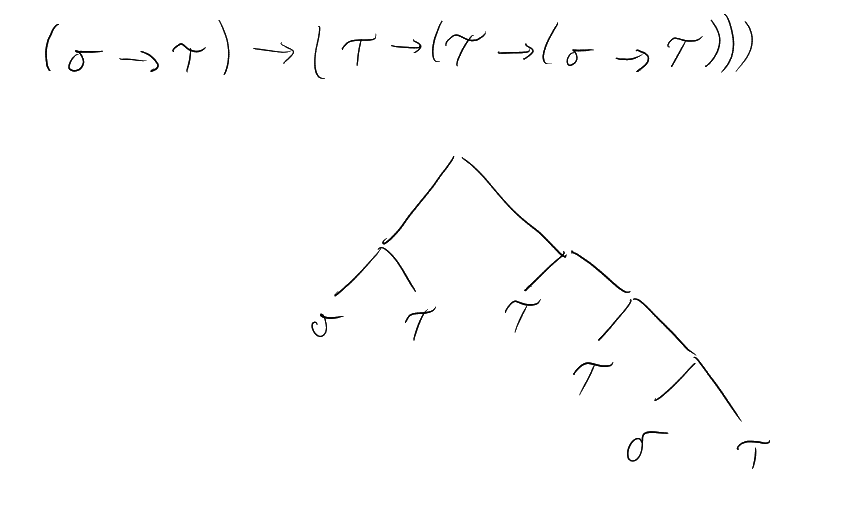

Example 1.3.2.

Let be

primitive propositions. The -term

|

|

has type ,

and therefore encodes a proof of that proposition in IPC().

,

.

| |

(toE) |

|

|

| |

(toE) |

|

|

| | (toI, φ→ψ) |

|

|

| | (toI, (φ→ψ) →φ) |

|

|

| |

Definition 1.3.3 (Full STlambdaC).

The types of the full simply typed

-calculus

are generated by the following grammar:

where

is a set of type variables (usually countable).

Its terms are given by

given by:

|

|

where

is an infinite set of variables, and

is a constant.

We have new typing rules:

-

-

-

-

-

-

-

-

for each

They come with new reduction rules:

-

Projections:

and

-

Pairs:

-

Definition by cases:

and

-

Unit: If ,

then

When setting up Curry-Howard with these new types, we let:

Example 1.3.4.

Consider the following proof of :

| |

() |

|

|

| | () |

|

|

| |

We decorate this proof by turning the assumptions into variables and following the Curry-Howard

correspondence:

| |

() |

|

|

| | () |

|

|

| |

| STC |

IPC |

|

|

| (primitive) types |

(primitive) propositions |

| variable |

hypothesis |

| ST-term | proof |

| type constructor | logical connective |

| term inhabitation |

provability |

| term reduction |

proof normalisation |

1.4 Semantics for IPC

Definition 1.4.1 (Lattice).

A lattice is a set

equipped with binary commutative and associative operations

and

that

satisfy the absorption laws:

for all .

A lattice is:

-

Distributive if

for all .

-

Bounded if there are elements

such that

and .

-

Complemented if it is bounded and for every

there is

such that

and .

A Boolean algebra is a complemented distributive lattice.

Note that

and

are idempotent in any lattice. Moreover, we can define an ordering on

by

setting

if .

Proposition 1.4.3.

Assuming that:

Then is a partial order

with least element

,

greatest element

,

and for any

,

we have

and

.

Conversely, every partial order with all finite infs and sups is a

bounded lattice.

Classically, we say that

if for every valuation

with for

all we

have .

We might want to replace

with some other Boolean algebra to get a semantics for IPC, with an accompanying Completeness Theorem.

But Boolean algebras believe in the Law of Excluded Middle!

Definition 1.4.4 (Heyting algebra).

A Heyting algebra is a bounded lattice equipped with a binary operation

such

that

for all .

A morphism of Heyting algebras is a function that preserves all finite meets, finite joins, and .

Definition 1.4.6 (Valuation in Heyting algebras).

Let

be a Heyting algebra and

be a propositional language

with a set of primitive

propositions. An -valuation

is a function , extended

to the whole of

recursively by setting:

-

,

-

,

-

,

-

A proposition

is -valid

if for all

-valuations

, and is an

-consequence of a (finite)

set of propositions

if for all

-valuations

(written

).

Lemma 1.4.7 (Soundness of Heyting semantics).

Assuming that:

Then

implies

.

Proof.

By induction over the structure of the proof

.

-

(Ax)

As

for any

and .

-

(-I)

and we have derivations ,

,

with .

By the induction hypothesis, we have ,

i.e. .

-

(-I)

and so we must have .

By induction hypothesis, we have .

By the definition of ,

this implies ,

i.e. .

-

(-I)

and without loss of generality we have a derivation .

By the induction hypothesis we have ,

but ,

and hence .

-

(-E)

By the induction hypothesis, we have .

-

(-E)

We know that .

From ,

we derive

by definition of .

So if

and ,

then ,

as needed.

-

(-E)

By induction hypothesis: ,

and .

This last fact means that .

Now this is the same as

as Heyting algebras are distributive lattices (see Example Sheet 2), and this is

by the first two inequalities of this paragraph.

-

(-E)

If ,

then ,

in which case

for any

by minimality of

in .

□

Example 1.4.8.

The Law of Excluded Middle is not intuitionistically valid. Let

be a primitive proposition and consider the Heyting algebra given by the topology

on .

We can define a valuation

with ,

in which case .

So . Thus Soundness of Heyting

semantics implies that .

Example 1.4.9.

Peirce’s Law

is not intuitionistically valid.

Take the valuation on the usual topology of

that maps

to and

to

.

Classical completeness:

if and only if .

Intuitionistic completeness: no single finite replacement for

.

Definition (Lindenbaum-Tarski algebra).

Let

be a logical doctrine (CPC, IPC, etc),

be a propositional language, and

be an -theory. The

Lindenbaum-Tarski algebra

is built in the following way:

-

The underlying set of

is the set of equivalence classes

of propositions ,

where

when

and ;

-

If

is a logical connective in the fragment ,

we set

(should check well-defined: exercise).

We’ll be interested in the case ,

, and

.

Proof.

Clearly, and

inherit associativity and

commutativity, so in order for

to be a lattice we need only to check the absorption laws:

Equation ()

is true since

by (-I), and

also by

(-E).

Equation ()

is similar.

Now, for distributivity:

by (-E) followed

by (-E):

| |

(-E) |

|

|

| | (-E) |

|

|

| |

Conversely,

by (-E) followed

by (-I).

□

Proof.

We already saw that

is a distributive lattice, so it remains to show that

gives a Heyting implication, and that

is bounded.

Suppose that ,

i.e. . We want

to show that ,

i.e. .

But that is clear:

| |

|

|

|

| |

(hyp) |

|

|

| | (-I, ) |

|

|

| |

Conversely, if , then

we can prove :

| |

(-E) |

|

|

| |

(hyp) |

|

|

| | (-E) |

|

|

| |

So defining

provides a Heyting .

The bottom element of

is just :

if is any

element, then

by -E.

The top element is :

if is any

proposition, then

via

□

Theorem 1.4.12 (Completeness of the Heyting semantics).

A proposition is provable in IPC if and only if it is

-valid for every

Heyting algebra .

Proof.

One direction is easy: if ,

then there is a derivation in IPC, thus

for any Heyting algebra

and valuation ,

by Soundness of Heyting semantics. But then

and

is -valid.

For the other direction, consider the Lindenbaum-Tarski algebra

of the empty theory relative to IPC, which is a Heyting algebra by Lemma 1.4.11. We can define a

valuation

by extending ,

to all propositions.

As

is a valuation, it follows by induction (and the construction of )

that

for all propositions.

Now

is valid in every Heyting algebra, and so is valid in

in particular. So ,

hence ,

hence .

□

Given a poset , we

can construct sets

called principal up-sets.

Recall that is a

terminal segment if

for each .

Proposition 1.4.13.

If

is a poset, then the set

can be made into a Heyting algebra.

Proof.

Order the terminal segments by .

Meets and joins are

and ,

so we just need to define .

If ,

define .

If , we

have

which happens if for every

and

we have .

But

is a terminal segment, so this is the same as saying that .

□

Definition 1.4.14 (Kripke model).

Let

be a set of primitive propositions. A Kripke model is a tuple

where

is a poset (whose elements are called “worlds” or “states”, and whose ordering is called the “accessibility

relation”) and

is a binary relation (“forcing”) satisfying the persistence property: if

is such that

and ,

then .

Every valuation

on induces a Kripke

model by setting

is .

Definition 1.4.15 (Forcing relation).

Let

be a Kripke model for a propositional language. We define the extended forcing relation inductively as follows:

-

There is no

with

;

-

if and only if

and

;

-

if and only if

or

;

-

if and only if

implies

for every

.

It is easy to check that the persistence property extends to arbitrary propositions.

Moreover:

-

if and only if

for all .

-

if and only if for every ,

there exists

with .

Notation.

for

a proposition

if all worlds in

force .

Example 1.4.16.

Consider the following Kripke models:

In (1), we have ,

since and

. We also

know that ,

thus .

It is also the case that ,

yet , so

either.

In (2), since

can’t access a

world that forces .

Also either,

as

forces .

So .

In (3), . All

worlds force , and

. So to check the claim we

just need to verify that .

But that is the case, as

and .

Definition 1.4.17 (Filter).

A filter

on a lattice is

a subset of

with the following properties:

A filter is proper if .

A filter

on a Heyting algebra is prime if it is proper and satisfies: whenever

, we can

conclude that

or .

If is a proper filter and

, then there is a prime

filter extending that

still doesn’t contain

(by Zorn’s Lemma).

Lemma 1.4.19.

Assuming that:

Then there is a Kripke model

such that if and

only if

, for every

proposition .

Proof (sketch).

Let

be the set of all prime filters of ,

ordered by inclusion. We write

if and only if

for primitive propositions .

We prove by induction that

if and only if

for arbitrary propositions.

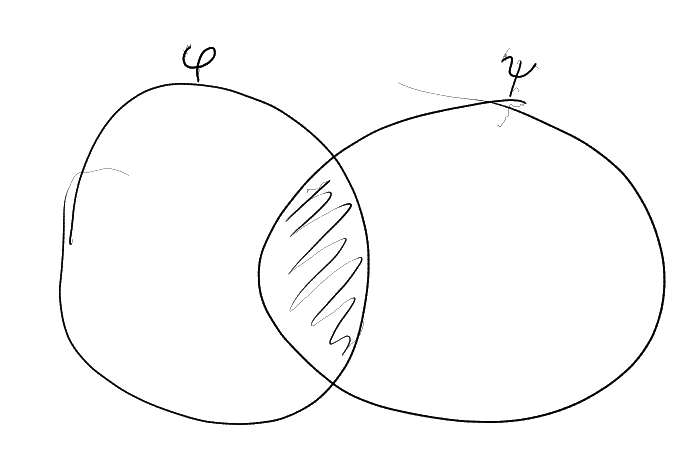

For the implication case, say that

and . Let

be the least

filter containing

and .

Then

|

|

Note that ,

or else

for some ,

whence

and so

(as

is a terminal segment).

In particular,

is proper. So let

be a prime filter extending

that does not contain

(exists by Zorn’s lemma).

By the induction hypothesis, ,

and since

and

(this )

contains ,

we have that .

But then ,

contradiction.

This settles that

implies .

Conversely, say that .

By the induction hypothesis, ,

and so

(as ).

But then ,

as

is a filter.

So the induction hypothesis gives ,

as needed.

The cases for the other connectives are easy (

needs primality). So

is a Kripke model. Want to show that

if and only if ,

for each .

Conversely, say ,

but .

Since ,

there must be a proper filter that does not contain it. We can extend it to a prime filter

that does not contain it, but then ,

contradiction. □

Theorem 1.4.20 (Completeness of the Kripke semantics).

Assuming that:

Then if and only if

for all Kripke models ,

the condition

implies .

Proof.

Soundness: induction over the complexity of .

Adequacy: Say .

Then

but

for some Heyting algebra

and -valuation

(Theorem 1.4.12). But then Lemma 1.4.19 applied to and

provides a Kripke model

such that ,

but ,

contradicting the hypothesis on every Kripke model. □

1.5 Negative translations

Definition 1.5.1 (Double-negation translation).

We recursively define the

-translation

of a

proposition

in the following way:

Lemma 1.5.2.

Assuming that:

Then the map

preserves

and

.

Proof.

Example Sheet 2. □

Lemma 1.5.3 (Regularisation).

Assuming that:

Then

Proof.

-

(1)

Give

the inherited order, so that ,

,

and

(which are preserved by )

remain the same. We just need to define disjunctions in

properly.

Define

in .

It is easy to show that this gives

in

(as

preserves order), so

is a Heyting algebra.

As every element of

is regular (i.e. ),

it is a Boolean algebra (see Example Sheet 2).

-

(2)

Given a Heyting homomorphism ,

where

is a Boolean algebra, define

as .

It clearly preserves ,

as those operations in

are inherited from .

But we also have

Thus is a morphism of Boolean

algebras. Note that any

provides an element ,

since in

.

Additionally,

for all

(as

is in a Boolean algebra).

Now, if is a morphism of

Boolean algebras with

for all ,

then

for all .

So

is unique with this property. □

In particular, if

is a set, then .

Theorem 1.5.4 (Glivenko’s Theorem).

Assuming that:

Then if

and only if .

Corollary 1.5.5.

Assuming that:

Then if

and only if .

Proof.

Induction over the complexity of formulae. □

Corollary 1.5.6.

CPC is inconsistent if and only if IPC is inconsistent.

Proof.

-

If CPC is inconsistent, then there is

such that

and .

But then

and ,

so .

-

Obvious. □