Entropy Methods in

Combinatorics

Lectured by Timothy Gowers

Contents

1 The Khinchin (Shannon?) axioms for entropy

Note.

In this course, “random variable” will mean “discrete random variable” (unless otherwise

specified).

All logarithms will be base

(unless otherwise specified).

Definition (Entropy).

The entropy of a discrete random variable

is a

quantity

that takes real values and has the following properties:

-

(i)

Normalisation: If

is uniform on

then .

-

(ii)

Invariance: If

takes values in ,

takes values in ,

is a bijection from

to ,

and for every

we have ,

then .

-

(iii)

Extendability: If

takes values in a set ,

and

is disjoint from ,

takes values in ,

and for all

we have ,

then .

-

(iv)

Maximality: If

takes values in a finite set

and

is uniformly distributed in ,

then .

-

(v)

Continuity:

depends continuously on

with respect to total variation distance (defined by the distance between

and

is ).

For the last axiom we need a definition:

Let and

be random variables.

The conditional entropy

of given

is

-

(vi)

Additivity: .

Lemma 1.1.

Assuming that:

Proof.

.

Since and

are independent, the

distribution of is

unaffected by knowing

(so by invariance, ),

so

for all ,

which gives the result. □

Corollary 1.2.

If

are independent, then

|

|

Proof.

Lemma 1.1 and obvious induction. □

Lemma 1.3 (Chain rule).

Assuming that:

Then |

|

Proof.

The case

is additivity. In general,

|

|

so we are done by induction. □

Lemma 1.4.

Assuming that:

Proof.

The map is

a bijection, and .

So the first statement follows by invariance. For the second statement:

Lemma 1.5.

Assuming that:

Then .

Proof.

and

are independent. Therefore, by Lemma 1.1, .

But by invariance, .

So .

□

Proposition 1.6.

Assuming that:

Then .

Proof.

Let

be independent random variables uniformly distributed on .

By Corollary 1.2 and normalisation, .

But

is uniformly distributed on ,

so by invariance, the result follows. □

Proposition 1.7.

Assuming that:

Then .

Reminder: here

is to the base

(which is the convention for this course).

Proof.

Let

be a positive integer and let

be independent copies of .

Then is

uniform on

and

Now pick

such that .

Then by invariance, maximality, and Proposition 1.6, we have that

So

|

|

Therefore,

as claimed. □

Notation.

We will write .

We will also use the notation .

Theorem 1.8 (Khinchin).

Assuming that:

Then |

|

Proof.

First we do the case where all

are rational (and then can finish easily by the continuity axiom).

Pick

such that for all ,

there is some

such that .

Let be uniform

on . Let

be a partition

of into sets with

. By invariance we

may assume that .

Then

Hence

|

|

By continuity, since this holds if all

are rational, we conclude that the formula holds in general. □

Corollary 1.9.

Assuming that:

Then

and .

Proof.

Immediate consequence of Theorem 1.8. □

Corollary 1.10.

Assuming that:

Then .

Proof.

.

But .

□

Proposition 1.11 (Subadditivity).

Assuming that:

Then .

Proof.

Note that for any two random variables

we have

Next, observe that

if is

uniform on a finite set. That is because

By the equivalence noted above, we also have that

if

is

uniform.

Now let and assume

that all are

rational. Pick such

that we can write

with each an

integer. Partition

into sets of

size . Let

be uniform on

. Without loss of generality

(by invariance) .

Let for each

. So

. Now define a

random variable

as follows: If ,

then , but then

is uniformly

distributed in and

independent of

(or if

you prefer).

So and

are conditionally

independent given ,

and is

uniform on .

Then

By continuity, we get the result for general probabilities. □

Corollary 1.12.

Assuming that:

Then .

Proof (Without using formula).

By Subadditivity, .

But .

□

Corollary 1.13.

Assuming that:

Then |

|

Proposition 1.14 (Submodularity).

Assuming that:

Proof.

Calculate:

Submodularity can be expressed in many ways.

Expanding using additivity gives the following inequalities:

Lemma 1.15.

Assuming that:

-

random variables

-

Proof.

Lemma 1.16.

Assuming that:

-

random variables

-

Then |

|

Proof.

Submodularity says:

|

|

which implies the result since

depends on

and .

□

Lemma 1.17.

Assuming that:

Then is

uniform.

Proof.

Let .

Then

The function

is concave on .

So, by Jensen’s inequality this is at most

|

|

Equality holds if and only if

is constant – i.e.

is uniform. □

Corollary 1.18.

Assuming that:

-

random variables

-

Then

and are

independent.

Proof.

We go through the proof of Subadditivity and check when equality holds.

Suppose that

is uniform on .

Then

with equality if and only if

is uniform on for all

(by Lemma 1.17),

which implies that

and are

independent.

At the last stage of the proof we used

|

|

where was uniform. So

equality holds only if

and are independent,

which implies (since

depends on )

that

and are

indpendent. □

Definition (Mutual information).

Let

and be random variables.

The mutual information

is

Subadditivity is equivalent to the statement that

and Corollary 1.18 implies that

if and only if

and are

independent.

Note that

|

|

Definition (Conditional mutual information).

Let

,

and

be random variables. The

conditional mutual information of

and

given ,

denoted by

is

Submodularity is equivalent to the statement that

.

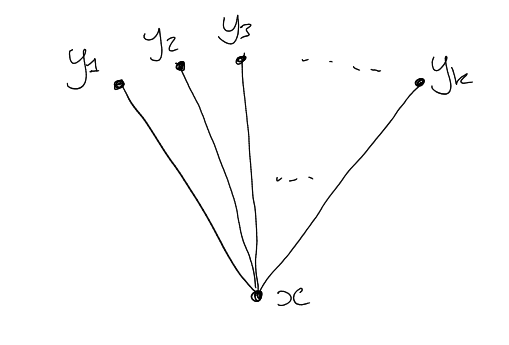

2 A special case of Sidorenko’s conjecture

Let be a bipartite

graph with vertex sets

and (finite)

and density

(defined to be ).

Let

be another (think of it as ‘small’) bipartite graph with vertex sets

and

and

edges.

Now let and

be random functions.

Say that is a

homomorphism if

for every .

Sidorenko conjectured that: for every ,

we have

|

|

Not hard to prove when

is . Also not hard

to prove when

is (use

Cauchy Schwarz).

Theorem 2.1.

Sidorenko’s conjecture is true if

is a path of length .

Proof.

We want to show that if

is a bipartite graph of density

with vertex sets

of size and

and we

choose ,

independently at random, then

|

|

It would be enough to let

be a P3 chosen uniformly at random and show that .

Instead we shall define a different random variable taking values in the set of all P3s (and then apply

maximality).

To do this, let

be a random edge of

(with ,

).

Now let

be a random neighbour of

and let

be a random neighbour of .

It will be enough to prove that

|

|

We can choose

in three equivalent ways:

-

(1)

Pick an edge uniformly from all edges.

-

(2)

Pick a vertex

with probability proportional to its degree ,

and then pick a random neighbour

of .

-

(3)

Same with

and

exchanged.

It follows that

with probability ,

so is

uniform in ,

so with

probability ,

so is

uniform in .

Therefore,

So we are done my maximality.

Alternative finish (to avoid using !):

Let be uniform in

and independent

of each other and .

Then:

So by maximality,

3 Brégman’s Theorem

Definition (Permanent of a matrix).

Let

be an matrix

over . The

permanent of ,

denoted , is

i.e. “the determinant without the signs”.

Let be a bipartite

graph with vertex sets

of size .

Given ,

let

|

|

ie is the bipartite

adjacency matrix of .

Then is the number of

perfect matchings in .

Brégman’s theorem concerns how large

can be if is a

-matrix and the sum

of entres in the -th

row is .

Let be a disjoint

union of s

for ,

with .

Then the number of perfect matchings in

is

Theorem 3.1 (Brégman).

Assuming that:

Then the number of perfect matchings in

is at most

Proof (Radhakrishnan).

Each matching corresponds to a bijection

such

that for

every .

Let be

chosen uniformly from all such bijections.

|

|

where

is some enumeration of .

Then

where

|

|

In general,

|

|

where

|

|

Key idea: we now regard

as a random enumeration of

and take the average.

For each , define

the contribution of

to be

where

(note that this “contribution” is a random variable rather than a constant).

We shall now fix . Let

the neighbours of

be .

Then one of the

will be , say

. Note that

(given

that ) is

|

|

All positions of are equally likely,

so the average contribution of

is

|

|

By linearity of expectation,

|

|

so the number of matchings is at most

Definition (-factor).

Let

be a graph with

vertices. A -factor

in

is a collection of

disjoint edges.

Theorem 3.2 (Kahn-Lovasz).

Assuming that:

Then the number of

-factors

in

is at

most

|

|

Proof (Alon, Friedman).

Let

be the set of -factors

of , and let

be a uniform

random element of .

For each ,

the union

is a collection of disjoint edges and even cycles that covers all the vertices of

.

Call such a union a cover of

by edges and even cycles.

If we are given such a cover, then the number of pairs

that could give

rise to it is ,

where

is the number of even cycles.

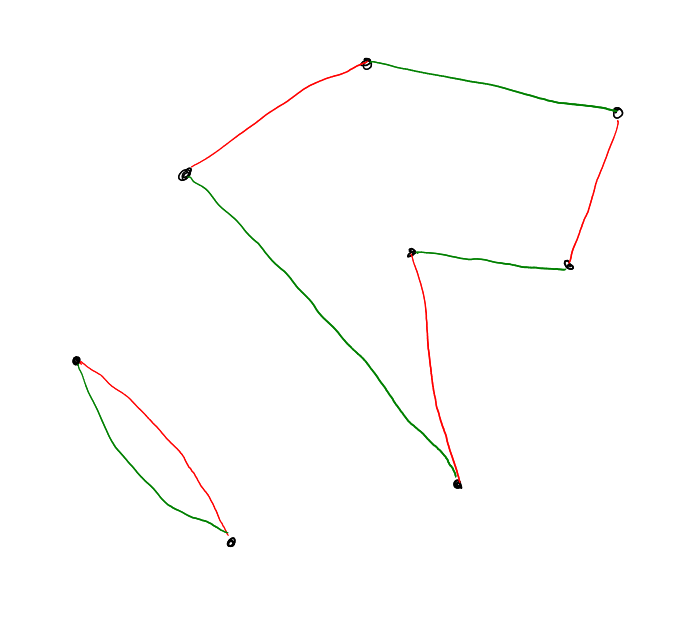

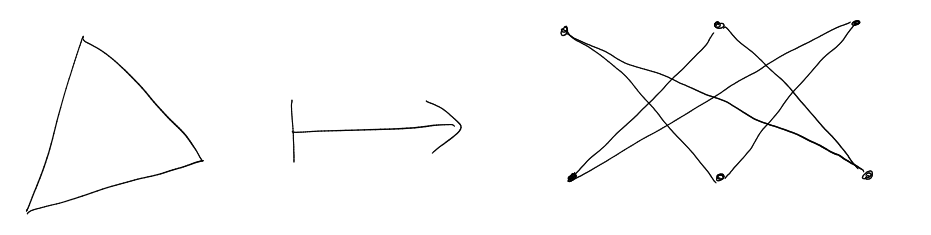

Now let’s build a bipartite graph

out of .

has two vertex

sets (call them ),

both copies of .

Join to

if and

only if .

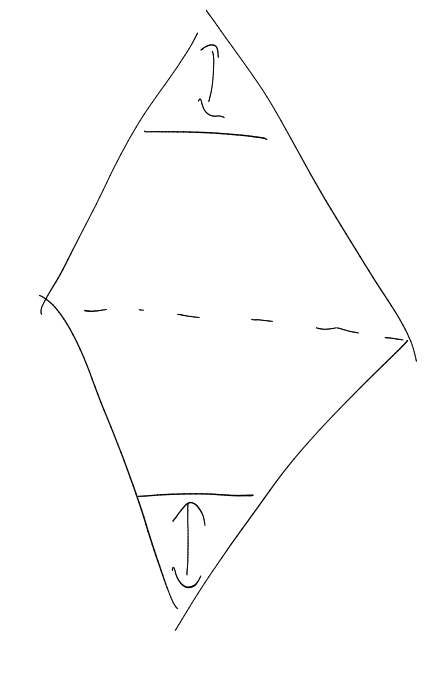

For example:

By Brégman, the number of perfect matchings in

is . Each matching

gives a permutation

of , such

that for

every .

Each such has a cycle decomposition,

and each cycle gives a cycle in .

So gives a

cover of

by isolated vertices, edges and cycles.

Given such a cover with

cycles, each edge can be directed in two ways, so the number of

that give

rise to is is ,

where

is the number of cycles.

So there is an injection from

to the set of matchings of ,

since every cover by edges and even cycles is a cover by vertices, edges and cycles.

So

|

|

4 Shearer’s lemma and applications

Notation.

Given a random variable

and with

, write

for the random

variable .

Lemma 4.1 (Shearer).

Assuming that:

-

a random variable

-

a family of subsets of

such that every

belongs to at least

of the sets

Then |

|

Proof.

For each ,

write

for .

For each ,

with

, we

have

Therefore,

Alternative version:

Lemma 4.2 (Shearer, expectation version).

Assuming that:

-

a random variable

-

a randomly chosen subset of ,

according to some probability distribution (don’t need any independence conditions!)

-

for each ,

Proof.

As before,

So

Definition ().

Let

and let .

Then we write

for the set of all

such that there exists

such that ,

where

is

suitably intertwined with

(i.e.

as functions).

Corollary 4.3.

Assuming that:

Proof.

Let be a uniform

random element of .

Then by Shearer,

But tkaes

values in ,

so

so

|

|

If we

get

|

|

This case is the discrete Loomis-Whitney theorem.

Theorem 4.4.

Assuming that:

Then has

at most

triangles.

Is this bound natural? Yes: if , and

we consider a complete graph on

vertices, then we get approximately

triangles.

Proof.

Let

be a random ordered triangle (without loss of generality

has a triangle so that this is possible).

Let be the number

of triangles in .

By Shearer,

|

|

Each edge is supported

in the set of edges ,

given a direction, i.e.

|

|

Definition.

Let

be a set of size

and let

be a set of graphs with vertex set .

Then

is -intersecting

(read as “triangle-intersecting”) if for all ,

contains a triangle.

Theorem 4.5.

Assuming that:

Then has size

at most .

Proof.

Let

be chosen uniformly at random from .

We write

for the set of (unordered) pairs of elements of .

Think of any

as a function from

to .

So .

For each ,

let be the

graph

For each , we shall look at the

projection , which we can think

of as taking values in the set .

Note that if ,

, then

, since

contains a triangle,

which must intersect

by Pigeonhole Principle.

Thus, is an intersecting

family, so it has size at most .

By Shearer, expectation version,

Definition (Edge-boundary).

Let

be a graph and let .

The edge-boundary

of

is the set of edges

such that .

If

or

and ,

then the -th

boundary

is the set of edges

such that ,

i.e.

consists of deges pointing in direction .

Theorem 4.6 (Edge-isoperimetric inequality in ).

Assuming that:

Then .

Proof.

By the discrete Loomis-Whitney inequality,

But

since each fibre contributes at least 2.

So

Theorem 4.7 (Edge-isoperimetric inequality in the cube).

Assuming that:

Then .

Proof.

Let be a uniform

random element of

and write .

Write

for . By

Shearer,

Hence

Note

|

|

The number of points of the second kind is ,

so .

So

Also, .

So we are done. □

Definition (Lower shadow).

Let

be a family of sets of size .

The lower shadow

is .

Notation.

Let

(for ).

Theorem 4.8 (Kruskal-Katona).

Assuming that:

Then .

Proof.

Let

be a random ordering of the elements of a uniformly random

. Then

Note that is an ordering

of the elements of some ,

so

|

|

So it’s enough to show

|

|

Also,

|

|

and

|

|

We would like an upper bound for . Our

strategy will be to obtain a lower bound for

in terms of .

We shall prove that

|

|

Let be chosen

independently of

with

|

|

(

will be chosen and optimised later).

Given ,

let

|

|

Note that and

have the same

distribution (given ),

so does

as well. Then

where

and .

It turns out that this is maximised when .

Then we get

|

|

This proves the claim.

Let .

Then

Since ,

it follows that

|

|

It follows that

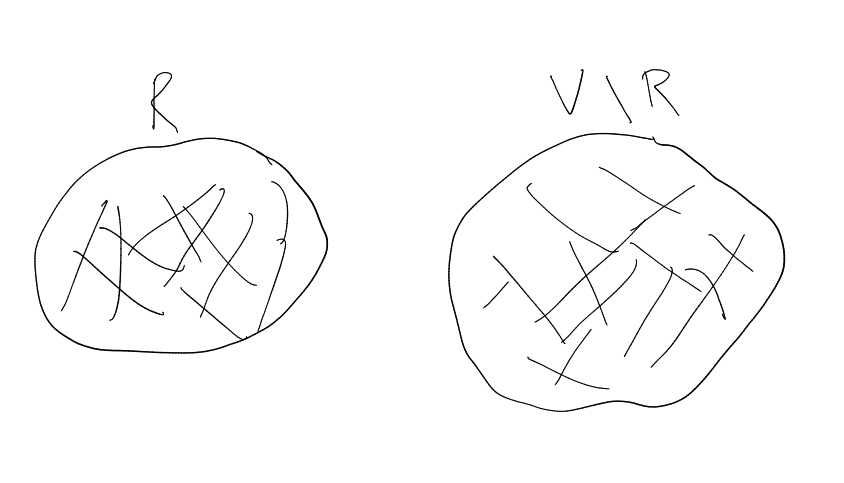

5 The union-closed conjecture

Definition (Union-closed).

Let

be a (finite) family of sets. Say that

is union closed if for any ,

we have .

Conjecture.

If is a non-empty

union-closed family, then there exists

that belongs to at least

sets in .

Theorem (Justin Gilmer).

There exists

such that if is a union-closed

family, then there exists

that belongs to at least

of the sets in .

Justin Gilmer’s constant was about .

His method has a “natural barrier” of .

We will briefly and “informally” discuss this.

A reason for this is that if we weaken the property union-closed to “almost union-closed” (if

we pick two elements randomly, then with high probability the union is in the family), then

is the

right bound.

Let . With high

probability, if are

random elements of ,

then .

If then almost

all of is

.

One of the roots of the quadratic

is .

If we want to prove Justin Gilmer’s Theorem, it is natural to let

be independent uniformly

random elements of

and to consider .

Since is

union-closed, , so

. Now we would like to

get a lower bound for

assuming that no

belongs to more than

sets in .

|

|

Lemma 5.1.

Assuming that:

Then .

Proof.

Think of as

characteristic functions. Write

for etc. By the Chain rule it is

enough to prove for every

that

|

|

By Submodularity,

|

|

For each

write ,

.

Then

|

|

which by hypothesis is at least

|

|

So

|

|

But

|

|

and

|

|

Similarly for the other term, so the RHS equals

|

|

which by hypothesis is greater than

|

|

as required. □

This shows that if is

union-closed, then ,

so . Non-trivial

as long as .

We shall obtain . We start by proving

the diagonal case – i.e. when .

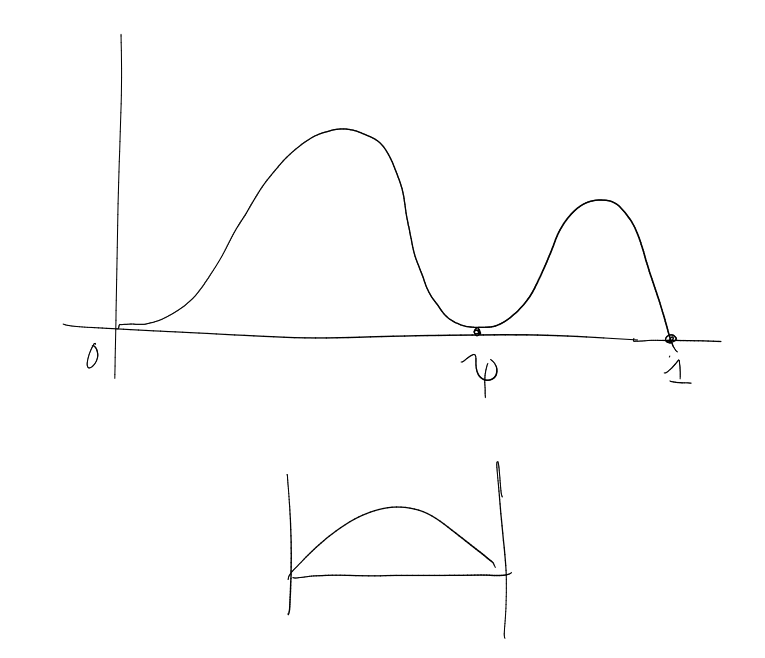

Lemma 5.2 (Boppana).

For every ,

Proof.

Write

for .

Then ,

so and

, so

. Equality

also when .

Toolkit:

Let .

Then

So

This is zero if and only if

|

|

which simplifies to

|

|

Since this is a cubic with negative leading coefficient and constant term, it has a negative root, so it has at most two roots in

. It follows (using Rolle’s

theorem) that has at

most five roots in ,

up to multiplicity.

But

|

|

So , so

has a double

root at .

We can also calculate (using ):

So there’s a double root at .

Also, note .

So is either

non-negative on all of or

non-positive on all of .

If is

small,

so there exists

such that .

□

Lemma 5.3.

The function

is minimised on

at a point where .

Proof.

We can extend continuously

to the boundary by setting

whenever

or is

or

. To see this, note first

that it’s valid if neither

nor is

.

If either

or is

small, then

So it tends to

again.

One can check that ,

so is minimised

somewhere in .

Let be a

minimum with .

Let and

note that

|

|

Also,

|

|

with equality at . So the partial

derivatives of LHS are both

at .

So . So it’s enough

to prove that is

an injection. ,

so

Differentiating gives

|

|

The numerator differentiates to , which

is negative everywhere. Also, it equals

at . So

it has a constant sign. □

Combining this with Lemma 5.2, we get that

|

|

This allows us to take .

6 Entropy in additive combinatorics

We shall need two “simple” results from additive combinatorics due to Imre Ruzsa.

Definition (Sum set / difference set / etc).

Let

be an abelian group and let .

The sumset

is the set .

The difference set

is the set .

We write

for ,

for ,

etc.

Definition (Ruzsa distance).

The Ruzsa distance

is

Lemma 6.1 (Ruzsa triangle inequality).

.

Proof.

This is equivalent to the statement

|

|

For each ,

pick ,

such

that .

Define a map

Adding the coordinates of

gives , so we

can calculate

(and ) from

, and hence

can calculate .

So is an

injection. □

Lemma 6.2 (Ruzsa covering lemma).

Assuming that:

-

an abelian group

-

finite subsets of

Then can be

covered by at most

translates of .

Proof.

Let

be a maximal subset of

such that the sets

are disjoint.

Then if ,

there exists

such that .

Then .

So can be

covered by

translates of .

But

|

|

Let ,

be discrete random variables taking values in an abelian group. What is

when

and

are

independent?

For each ,

.

Writing

and for

and

respectively,

this givesim

where

and .

So, sums of independent random variables

convolutions.

Definition (Entropic Ruzsa distance).

Let

be an abelian group and let ,

be

-valued random variables.

The entropic Ruzsa distance

is

|

|

where ,

are independent copies of

and .

Lemma 6.3.

Assuming that:

-

,

are finite subsets of

-

,

are uniformly distributed on ,

respectively

Proof.

Without loss of generality ,

are

indepent. Then

Lemma 6.4.

Assuming that:

Then |

|

Proof.

By symmetry we also have

|

|

Corollary.

Assuming that:

Then:

|

|

Corollary 6.5.

Assuming that:

Proof.

Without loss of generality ,

are independent.

Then ,

so

Lemma 6.6.

Assuming that:

Then if and only if there

is some (finite) subgroup

of such that

and

are uniform

on cosets of .

Proof.

-

If ,

are uniform

on ,

, then

is uniform

on ,

so

|

|

So .

-

Suppose that ,

are independent and .

From the first line of the proof of Lemma 6.4, it follows that

. Therefore,

and

are independent.

So for every

and every ,

|

|

where ,

, i.e. for

all ,

|

|

So

is constant on .

In particular, .

By symmetry, .

So

for any .

So for every ,

,

,

so .

So

is the same for every .

Therefore,

for every .

It follows that

|

|

So

is a subgroup. Also, ,

so

is a coset of .

,

so

is also a coset of .

□

Recall Lemma 1.16: If ,

then:

|

|

Lemma 6.7 (The entropic Ruzsa triangle inequality).

Assuming that:

Then |

|

Proof.

We must show that (assuming without loss of generality that

,

and

are

independent) that

|

|

i.e. that

|

|

Since is a function

of and is also

a function of ,

we get using Lemma 1.16 that

|

|

This is the same as

|

|

By independence, cancelling common terms and Subadditivity, we get ().

□

Lemma 6.8 (Submodularity for sums).

Assuming that:

Then |

|

Proof.

is a

function of and

also a function of .

Therefore (using Lemma 1.16),

|

|

Hence

|

|

By independence and cancellation, we get the desired inequality. □

Lemma 6.9.

Assuming that:

Proof.

Let ,

,

be independent

copies of .

Then

(as are all

copies of ).

□

Corollary 6.10.

Assuming that:

Proof.

Conditional Distances

Definition (Conditional distance).

Let

be -valued random

variables (in fact,

and don’t have

to be -valued

for the definition to make sense). Then the conditional distance is

|

|

The next definition is not completely standard.

Definition (Simultaneous conditional distance).

Let

be

-valued random variables. The

simultaneous conditional distance of

to given

is

|

|

We say that ,

are conditionally

independent trials of ,

given

if:

-

is distributed like .

-

is distributed like .

-

For each ,

is distributed like ,

-

For each ,

is distributed like .

-

and

are independent.

Then

|

|

(as can be seen directly from the formula).

Lemma 6.11 (The entropic BSG theorem).

Assuming that:

Then |

|

Remark.

The last few terms look like .

But they aren’t equal to it, because

and

aren’t (necessarily) independent!

Proof.

|

|

where ,

are conditionally

independent trials of ,

given

. Now

calculate

Similarly, is

the same, so

is also the same.

|

|

Let and

be conditionally

independent trials of

given .

Then .

By Submodularity,

Finally,

Adding or subtracting as appropriate all these terms gives the required inequality. □

7 A proof of Marton’s conjecture in

We shall prove the following theorem.

Theorem 7.1 (Green, Manners, Tao, Gowers).

There is a polynomial

with the following

property:

If and

is such that

, then there is a

subspace of size at

most such that

is contained in the union

of at most translates

of . (Equivalently,

there exists ,

such

that ).

This is known as “Polynomial Freiman–Ruzsa”.

In fact, we shall prove the following statement.

Theorem 7.2 (Entropic Polynomial Freiman–Ruzsa).

There exists an absolute constant

satisfying the

following: Let

and let be

-valued random variables.

Then there exists a subsgroup

of such

that

|

|

where is the uniform

distribution on .

Lemma 7.3.

Assuming that:

Then there exists

such that .

Proof.

If not, then

|

|

contradiction. □

Proposition 7.4.

Theorem 7.2 implies Theorem 7.1.

Proof.

Let ,

. Let

and

be independent

copies of . Then by

Theorem 7.2, there exists

(a subgroup) such that

|

|

so

But

So .

Therefore

Therefore, by Lemma 7.3, there exists

such that

|

|

But

|

|

(using characteristic 2). So there exists

such that

|

|

Let . By the Ruzsa covering

lemma, we can cover

by at most

translates of .

But so

, so

can be covered

by at most

translates of .

But using ,

So

|

|

Since is

contained in ,

so , so

|

|

If then we are done.

Otherwise, since ,

so .

Pick a subgroup

of of size

between

and . Then

is a union

of at most

translates of ,

so is a union

of at most

translates of .

□

Now we reduce further. We shall prove the following statement:

Theorem 7.5 (EPFR).

There is a constant

such that if and

are any two

-valued random

variables with , then

there exists -valued

random variables

and

such that

|

|

Proof.

By compactness we can find ,

such

that

|

|

is minimised. If

then by EPFR()

there exist ,

such that .

But then

Contradiction.

It follows that .

So there exists

such that and

are uniform

on cosets of ,

so

|

|

which gives us EPFR().

□

Definition.

Write

for

|

|

Definition.

Write

for

|

|

Remark.

If we can prove EPFR

for conditional random variables, then by averaging we get it for some pair of random variables (e.g. of the

form

and ).

Lemma 7.7 (Fibring lemma).

Assuming that:

Then |

|

Proof.

But the last line of this expression equals

|

|

We shall be interested in the following special case.

Corollary 7.8.

Assuming that:

Then

Proof.

Apply Lemma 7.7 with ,

and .

□

We shall now set .

Recall that Lemma 6.11 says

|

|

Equivalently,

|

|

Applying this to the information term (),

we get that it is at least

which simplifies to

So Corollary 7.8 now gives us:

Now apply this to ,

and

and

add.

We look first at the entropy terms. We get

where we made heavy use of the observation that if

are some

permutation of ,

then

|

|

This also allowed use e.g. to replace

|

|

by

|

|

Therefore, we get the following inequality:

Lemma 7.9.

Now let

be copies of

and copies of

and apply

Lemma 7.9 to

(all independent), to get this.

Lemma 7.10.

Assuming that:

-

satisfy:

and

are copies of ,

and

are copies of ,

and all of them are independent

Then

Recall that we want

such that

Lemma 7.10 gives us a collection of distances (some conditioned), at least one of which is at most

. So it

will be enough to show that for all of them we get

|

|

for some absolute constant .

Then we can take .

Definition (-relevant).

Say that is

-relevant

to if

|

|

Proof.

.

□

Lemma 7.12.

Assuming that:

Then |

|

Proof.

Corollary 7.13.

Assuming that:

-

-

are independent copies of

Proof.

□

Corollary 7.14.

is -relevant

to .

Proof.

is -relevant

to ,

so by Corollary 7.13 we’re done. □

Corollary.

Assuming that:

Proof.

By Lemma 7.12,

Corollary 7.15.

Assuming that:

Proof.

Similarly for .

□

Lemma 7.16.

Assuming that:

Then |

|

Proof.

But , so

it’s also

|

|

Averaging the two inequalities gives the result (as earlier). □

Corollary 7.17.

Assuming that:

Then

Proof.

Use Lemma 7.16. Then as soon as it is used, we are in exactly the situation we were in when

bounding the relevance of

and .

□

It remains to tackle the last two terms in Lemma 7.10. For the fifth term we need to bound

|

|

But first term of this is at most (by Lemma 7.12)

|

|

By The entropic Ruzsa triangle inequality and independence, this is at most

Now we can use Lemma 7.16, and similarly for the other terms.

In this way, we get that the fifth and sixth terms have relevances bounded above by

for an absolute

constant .

˙

Index

-relevant

additivity

entropy

conditional mutual information

conditionally independent trials

continuity

entropy

extendability

Justin Gilmer’s Theorem

invariance

maximality

mutual information

normalisation

-factor

discrete Loomis-Whitney

-intersecting

union-closed