Analytic Number

Theory

Daniel Naylor

Contents

What is analytic number theory?

-

Study of number-theoretic problems using analysis (real, complex, Fourier, …)

-

Also tools from combinatorics, probability, …

What kind of problems are studied?

A variety of problems about integers, especially primes.

-

Are there infinitely many primes? (Euclid, 300BC)

-

Are there infinitely many primes starting with 7 in base 10? (follows from prime number theorem)

-

Are there infinitely many primes ending with 7 in base 10? (follows from Dirichlet’s theorem)

-

Are there infinitely many primes with 49% of the digits being 7 in base 10? would follow from

the Riemann hypothesis

-

Are there infinitely many pairs of primes differing by 2? (twin prime conjecture)

Key feature: To show that a set (of primes) is infinite, want to estimate the number of elements

.

Definition.

Define

|

|

Euclid showed: .

Theorem (Prime number theorem).

|

|

.

(Conjectured: Legendre, Gauss. Proved: Hadamard, de la Vallée Poussin)

1 Estimating Primes

Theorem (Euler).

.

Proof.

Consider ,

for . We

have:

On the other hand, using ,

so

Comparing these two bounds gives

|

|

Then letting

gives the desired result. □

Theorem (Chebyshev’s Theorem).

(for ,

where

is an absolute constant).

Proof.

Consider

for . We

have

|

|

On the other hand,

where is

the largest

such that .

We have for

. So

|

|

Take ,

for .

Hence

|

|

Then

So for and a suitable

large enough constant ,

we have

|

|

Hence

for any , as

long as .

Take .

Choose

large enough. □

1.1 Asymptotic Notation

Definition 1.1 (Big and little notation).

Let ,

.

Write

if there is

such that

for all .

Write

if for any

there is

such that

for ,

.

Write

if

and write

if .

Definition 1.2 (Vinogradov notation).

Let ,

.

Write

or

if .

Example.

-

(),

since ,

.

-

(for ).

-

for ,

since .

-

for

(since ).

-

(for ).

Lemma.

Let .

-

(i)

If

and ,

then

(transitivity).

-

(ii)

If

and ,

then .

-

(iii)

If

and ,

then .

Proof.

Follows from the definition in a straightforward way. Example:

-

(iii)

,

.

Then ,

so .

□

1.2 Partial Summation

Lemma 1.3 (Partial Summation).

Assuming that:

Then |

|

where for ,

we define

|

|

Proof.

It suffices to prove the

case, since then

|

|

Suppose .

By the fundamental theorem of calculus,

|

|

Summing over ,

we get

Lemma.

If ,

then

|

|

where is Euler’s constant,

which is given by .

Proof.

Apply Partial Summation with ,

,

. Clearly

.

Then,

The last equality is true since .

Let .

Then we have the asymptotic equation as desired.

Taking in the

formula, we see that

is equal to the formula for Euler’s constant, as desired. □

Lemma.

For ,

|

|

Proof.

Apply Lemma 1.3 with

(where

is the set of primes), ,

and .

We get ,

and then

1.3 Arithmetic Functions and Dirichlet convolution

Definition (Arithmetic function).

An arithmetic function is a function .

Definition (Multiplicative).

An arithmetic function

is multiplicative if

and

whenever

are coprime.

Moreover,

is completely multiplicative if

for all .

On the space of arithmetic functions, we have operations:

Definition (Dirichlet convolution).

For ,

we define

|

|

where

means sum over the divisors of .

Proof.

Since arithmetic functions with

form an abelian group, it suffices to show:

-

(i)

-

(ii)

-

(iii)

-

(iv)

Proofs:

Proof.

Need

such that .

|

|

Assume defined

for . We will

defined .

Proof.

For the first part, suffices to show closedness. Let

be multiplicative, and

coprime.

If , we can

write ,

where

,

,

and

.

Therefore we have:

(also need to check that inverses are multiplicative).

Now, remains to show that for completely multiplicative

,

.

Note that is multiplicative by the

first part. So enough to show

for prime powers .

Calculate:

and for :

Example.

.

Then

So is

multiplicative by the previous result.

Definition (von Mangoldt function).

The von Mangoldt function

is

|

|

Then

since

|

|

Since is the

inverse of ,

we have

|

|

1.4 Dirichlet Series

For a sequence ,

we want to associate a generating function that gives information of

. Might

consider

If we do this, then

is hard to control. So this is not very useful.

The following series has nicer number-theoretic properties:

Definition (Formal series).

For ,

define a (formal) series

for .

Lemma.

Assuming that:

Then converges absolutely

for and defines an

analytic function for .

Proof.

Let

(fixed),

and .

Then

Hence we have absolute convergence for .

Also, is a uniform

limit of the functions .

From complex analysis, a uniform limit of analytic functions is analytic. Hence

is analytic

for .

Now let .

□

Theorem (Euler product).

Assuming that:

Then |

|

for .

Furthermore, if

is completely multiplicative, then

|

|

for .

Proof.

Let ,

. Let

|

|

Note that the series defining the factors are absolutely convergent, since

|

|

(geometric series).

Therefore, multiplying out,

|

|

where

|

|

The fundamental theorem of arithmetic tells us that

and

for .

Now,

(since ).

Hence .

Finally, for

completely multiplicative, use geometric formula:

|

|

Lemma.

Assuming that:

-

-

Proof.

We know

and are

absolutely convergent, so can expand out the product.

Definition (Riemann zeta function).

For ,

define

Example.

-

for

(since ).

-

for

(since

is the Dirichlet inverse of ,

so ).

-

for ,

since .

Can differentiate termwise, since if

analytic and

uniformly, then

is analytic and .

We know that

converges uniformly for

if .

-

for .

This is because

– see the definition of the von Mangoldt function.

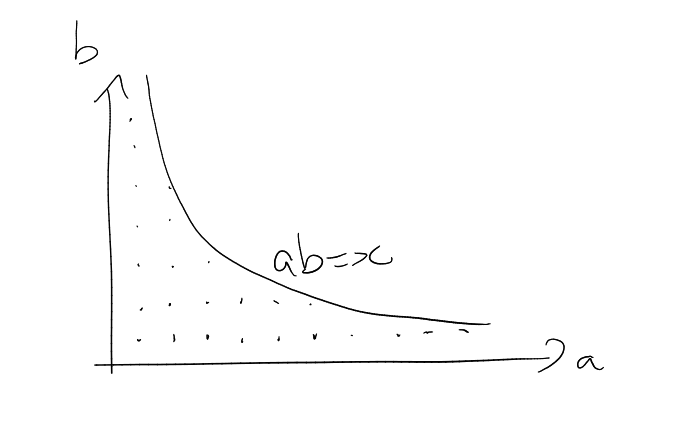

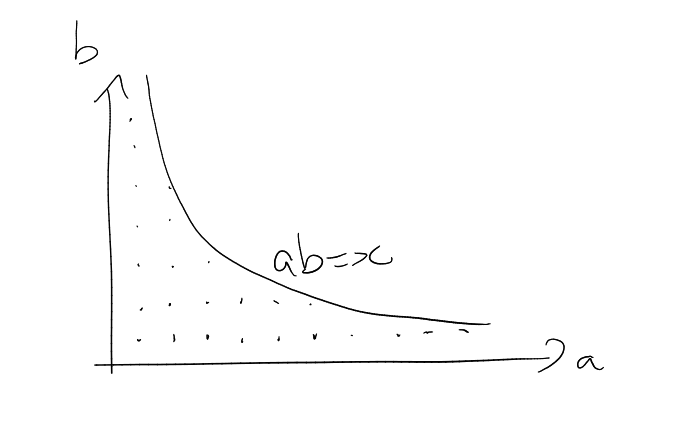

Dirichlet hyperbola method

Problem: How many lattice points

satisfy ?

Note that this number is .

Dirichlet proved that for ,

|

|

where

is Euler’s constant.

We will see a proof of this shortly.

Conjecture: Can have .

Current best exponent is .

First, we prove a lemma:

Lemma (Dirichlet hyperbola method).

Assuming that:

Then |

|

Proof.

.

Split this sum into parts with

and

to get the conclusion. □

Theorem 1.5 (Dirichlet’s divisor problem).

For

,

|

|

where

is Euler’s constant.

Proof.

We use the Dirichlet hyperbola method, with

. Note

that .

Then,

Recall from Lecture 2 that

|

|

Taking ,

the previous expression becomes

|

|

2 Elementary Estimates for Primes

Recall from Lecture 1:

2.1 Merten’s Theorems

Theorem 2.1 (Merten’s Theorem).

Assuming that:

Then

-

(i)

-

(ii)

(for some )

-

(iii)

(for some )

Remark.

Can show .

Proof.

-

(i)

Let .

Consider .

We have

(the second inequality cn be proved by induction, using

).

Let be the

largest power of

dividing .

Then

We have .

Indeed,

Now,

Combine with

to get

|

|

Divide by

to get the result.

-

(ii)

By Partial Summation, this is

|

|

Writing ,

we get

By part (i),

Take .

-

(iii)

Use Taylor expansion

|

|

to get

Write .

We get

Taking exponentials,

|

|

2.2 Sieve Methods

Definition (Sieve problem).

Let .

Let

and let .

Denote

|

|

Problem: Estimate .

Note that if ,

Sieve hypothesis: There exists a multiplicative

and

such that

|

|

for all square-free

(no repeated prime factors).

Example.

-

,

.

|

|

Then ,

.

|

|

-

,

. Let

,

where

are distinct primes. Then

(by Chinese Remainder Theorem).

Here,

|

|

and , where

is the number of

prime factors of

(distinct).

Lemma.

We have

|

|

Proof.

Recall that

(since is the

inverse of ).

Hence,

Example.

Let

|

|

Let .

Let .

Then

By the previous lemma,

For ,

|

|

Theorem (Sieve of Erastothenes – Legendre).

Assuming that:

Then

Proof.

Recall from previous lecture that

We estimate the first error term using Cauchy-Schwarz:

|

|

Note that if ,

, then

( – number of distinct

prime factors of ),

since .

Hence, ,

,

.

Now we get

(the last step is by Dirichlet’s divisor problem: ).

Substituting this in, the first error term becomes as desired.

Now we estimate the second error term. We have

Combining the error terms, the claim follows. □

Example.

Take ,

. Then

,

, so the

sieve gives us

By Merten’s Theorem,

Hence,

|

|

Hence, for ,

|

|

This asymptotic in fact holds for .

In particular, the Erastothenes-Legendre sieve gives

|

|

for . Not quite the

Chebyshev bound .

2.3 Selberg Sieve

| |

asymptotes |

good upper bound for primes |

| | |

| Erastothenes-Legendre | ✓ | ✗ |

| | |

| Selberg | ✗ | ✓ |

Theorem 2.2 (Selberg sieve).

Assuming that:

Then |

|

Sieve hypothesis: There is a multiplicative

and

such that

for all square-free .

Proof.

Let

be real numbers with

Then,

|

|

(If ,

get

otherwise use ).

Summing over ,

( means

).

We first estimate :

We have

Therefore

|

|

Now it suffices to prove that there is a choice of

satisfying ()

such that

Claim 1: .

Claim 2: for

all .

Proof of claim 1:

We have, writing ,

,

We have

|

|

(since )

so the previous expression becomes

(multiplicativity, ,

,

) since

(check

on primes ).

Now we get

|

|

where

|

|

Want to minimise this subject to ().

We need to translate the condition .

Note that

Hence,

|

|

Now,

By Cauchy-Schwarz, we then get

|

|

Hence

|

|

Equality holds for ,

where . We now check

that with these ,

for

.

Note that

|

|

Hence,

for .

This proves Claim 1.

Now we prove Claim 2 ().

Note that any has at most

one representation as ,

where ,

(for any

).

Now,

Now,

|

|

Substituting the lower bound for ,

|

|

since .

□

Lemma 2.3.

Assuming that:

-

-

-

for some

we have

|

|

Then |

|

where is defined

in terms of

as in Selberg’s sieve.

Proof.

Note that for any ,

|

|

Then

By Rankin’s trick,

Choose

and to

get the claim. □

The Brun-Titchmarsh Theorem

Theorem 2.4 (Brun-Titchmarsh Theorem).

Assuming that:

Then |

|

Remark.

We expect

|

|

in a wide range of

(e.g. for some

fixed). The prime number

theorem gives this for .

The Brun-Titchmarsh Theorem gives an upper bound of the expected order for

.

Proof.

Apply the Selberg sieve with ,

. Note that

for any ,

|

|

Hence, the sieve hypothesis holds with ,

.

Now, the function

in Selberg sieve is given on primes by

|

|

where

is the Euler totient function. In general,

(since is multiplicative).

Now, for any ,

Selberg sieve yields

|

|

By Problem 11 on Example Sheet 1, the error term is .

Take .

Then

|

|

(for ).

We estimate

since any

has at least one representation as

where is

square-free and

are primes and .

We have proved

|

|

for .

Putting everything together gives us

for .

□

3 The Riemann Zeta Function

Recall that the Riemann zeta function is defined by

for .

Remarkable properties:

-

(1)

extends meromorphically to .

-

(2)

A functional equation relating .

-

(3)

All the (non-trivial) zeroes appear to be on the line

(Riemann hypothesis).

-

(4)

closely relates to the distribution of primes.

Notation.

means .

means that the

constant in can

depend on .

Lemma 3.1.

Assuming that:

Then .

Proof.

By the Euler product formula,

|

|

hence

|

|

By the triangle inequality,

|

|

Hence,

|

|

Note that these products converge if and only if

converges, and this sum converges by the comparison test. □

Lemma 3.2 (Polynomial growth of in half-planes).

-

(i)

extends to a meromorphic function on ,

with the only pole being a simple pole which is at .

-

(ii)

Let

be an integer. Then, for

and

we have .

Proof.

Let

be an integer.

We claim that there exist polynomials ,

of degree

and such

that for ,

|

|

First assume ()

holds.

Then, since ,

for ,

,

()

gives .

So (ii) follows.

For (i), using analytic continuation and (),

it suffices to show that the RHS of ()

is meromorphic for ,

with the only pole a simple one at .

Suffices to show that

is analytic for .

This follows from the following criterion: If

is open,

is piecewise continuous, and if

is analytic in

for any ,

then

is analytic in ,

provided that

is bounded on compact subsets of .

Applying this with

concludes the proof of (i) assuming ().

We are left with proving ().

We use induction on .

Case :

By Partial Summation,

Take ,

.

Case

assuming :

Let

For , we

have

|

|

Let

|

|

This is a polynomial of degree .

By integration by parts,

|

|

Substituting this into the previous equation, we get that case

follows.

□

The Gamma function

Definition (Gamma function).

For ,

let

Lemma 3.3.

is analytic for .

Proof.

Apply the same criterion for integral of analytic function being analytic as in the previous

lemma, taking .

Note that for ,

|

|

Lemma (Functional equation for ).

The

function extends meromorphically to ,

with the only poles being simple poles at .

Moreover:

-

(i)

for .

-

(ii)

for all

(Euler reflection formula).

Proof.

-

(i)

For ,

by integration by parts,

|

|

This proves (i) for .

Now for any ,

for we

have

|

|

The RHS is analytic for ,

so can use analytic continuation to extend

meromorphically to ,

with the only poles being simple poles at .

Let .

-

(ii)

Since both sides are analytic in ,

by analytic continuation, it suffices to prove the formula for .

Now, for any ,

Multiply by

and integrate, and use Fubini to get

Hence, the remaining task is to show

|

|

We will do this next time.

□

Theorem 3.4 (Functional equation for ).

Assuming that:

Then is an entire

function and

for

.

Hence

|

|

for .

Proof.

Let .

Then,

|

|

Make the change of variables

to get

|

|

Hence

|

|

Summing over

and using Fubini,

By the functional equation

we have

Plugging this into (),

we get

|

|

Hence

|

|

Since ,

applying the criterion for integrals of analytic functions being analytic, we see that

is entire. So by analytic

continuation, ()

holds for all .

Moreover, the expression for

is symmetric with respect to ,

so ,

.

□

Corollary 3.5 (Zeroes and poles of ).

The function extends to a

meromorphic function in

and it has

-

(i)

Only one pole, which is a simple pole at ,

residue .

-

(ii)

Simple zeroes at .

-

(iii)

Any other zeroes satisfy .

Proof.

-

(ii) – (iii)

Follows from the lemma on polynomial growth of

on vertical lines.

-

(ii) – (iii)

We know

for .

We want to show that if

and

then

and

is a simple zero.

Let . By the functional

equation for ,

|

|

We claim that

for all .

By the Euler reflection formula,

|

|

for .

If ,

then

has a pole at .

Hence,

for some

integer. But then ,

and .

We conclude that for ,

Since the poles of

are simple, has

a simple zero at ,

.

□

3.1 Partial fraction approximation of

This is a formula for .

For the proof we need a lemma. We write, for ,

,

Lemma 3.6 (Borel-Caratheodory Theorem).

Assuming that:

Then |

|

Lemma 3.7 (Landau).

Assuming that:

Then for

,

|

|

where is the set

of zeroes of

in ,

counted with multiplicities.

Note.

If is

a polynomial, we can factorise

and then

Proof.

Let

|

|

Then is analytic and

non-vanishing in .

Note that

|

|

().

Hence, it suffices to prove

for .

Write

Then is analytic and

non-vanishing in ,

and .

We want to show

for .

For all

we have

|

|

since

|

|

for .

By the maximum modulus principle,

for , so

|

|

By the Borel-Caratheodory Theorem with radii ,

we have

for for ,

|

|

Now, for ,

Cauchy’s theorem gives us

Theorem 3.8 (Partial Fraction approximation of ).

Proof.

We apply Landau with ,

,

with .

By the lemma on polynomial growth of

on vertical lines, for ,

we have

().

Let .

Then Landau gives us

Since contains

all the points

with , it

suffices to show

|

|

Substituting

in (), we

get

since

Taking real parts,

|

|

Since ,

|

|

This proves part (ii). It gives also ()

since the sum there contains

zeros. □

3.2 Zero-free region

Proposition 3.9.

Assuming that:

Then |

|

Proof.

Recall that

|

|

where

is the von Mangoldt function function, and .

Taking linear combinations, the LHS of ()

becomes

|

|

().

We are done by the inequality:

|

|

for .

□

Theorem 3.10 (Zero-free region).

There is a constant

such that

whenever

. In

particular,

for .

Proof.

Let

and .

Suppose .

uwe know that .

We know that

has no zeroes in some ball

for some

(otherwise the entire function

would have an accumulation point for its zeros).

Choosing small enough,

we can assume that . By

the key inequality for ,

|

|

Apply partial fraction decomposition of .

So

|

|

().

Since for

any zero ,

|

|

().

Discarding terms, we get

|

|

Take , and

assume

to get

|

|

Take

to get a contradiction. □

Theorem 3.11 (Bounding ).

Assuming that:

Then |

|

Proof.

If

with ,

then

Assume then that .

Apply ().

Each term satisfies

We know that there are zeros with

multiplicity having imaginary part .

The claim follows from triangle inequality. □

˙

Index

arithmetic function

Chebyshev’s Theorem

completely multiplicative

Euler’s constant

multiplicative

sieve hypothesis

Euler’s Theorem

von Mangoldt function